Web scraping is an essential tool for data-driven industries, enabling businesses to gather valuable insights from online sources. However, the process of extracting data from websites can be time-consuming and challenging, especially when dealing with large volumes of data and dynamic content. In this blog post, we will explore essential techniques and best practices to boost your web scraping speed, helping you optimize your data collection efforts and achieve faster results.

Web scraping has become an indispensable tool for businesses and individuals looking to extract valuable data from websites. Whether you're gathering market intelligence, monitoring competitor prices, or collecting data for research purposes, web scraping enables you to automate the process and save time. However, as websites grow more complex and the volume of data increases, the speed of your web scraping operations becomes crucial.

Inefficient web scraping can lead to several issues, such as unnecessary server load, IP blocking, and wasted resources. By optimizing your web scraping techniques and adopting best practices, you can significantly boost your scraping speed and achieve faster data collection.

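We'll cover topics such as multithreading, multiprocessing, asynchronous scraping with asyncio and aiohttp, proxy rotation and session management, and popular web scraping tools and frameworks.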

By implementing these techniques and best practices, you'll be able to extract data from websites more efficiently, reduce the risk of being blocked, and ultimately save time and resources in your web scraping endeavors. Let's dive in and explore how you can boost your web scraping speed!

Web scraping is the process of extracting data from websites automatically using specialized software or scripts. It involves retrieving the HTML content of a web page, parsing it, and extracting the desired information. Web scraping has become increasingly popular in data-driven industries, such as e-commerce, market research, and data analytics, as it enables businesses to gather valuable insights and make data-informed decisions.
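As a minimal sketch of the parse-and-extract step, the snippet below uses only Python's standard-library `html.parser` on a hard-coded HTML string rather than a live page. The `LinkExtractor` class, the `extract_links` function, and the sample markup are illustrative assumptions, not part of any particular scraper.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Parse an HTML string and return the link targets in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


# Sample markup standing in for a downloaded page
SAMPLE_HTML = '<html><body><a href="/a">A</a><p>text</p><a href="/b">B</a></body></html>'
print(extract_links(SAMPLE_HTML))
```

In a real scraper the HTML string would come from an HTTP response, and a library such as Beautiful Soup would usually replace the hand-written parser.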

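The usefulness of web scraping lies in its ability to automate data collection and save time, whether you're gathering market intelligence, monitoring competitor prices, or collecting data for research.

However, web scraping also comes with its own set of challenges. Common issues include dynamic content, large volumes of data, and IP blocking.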

To overcome these challenges, web scraping practitioners employ various techniques and best practices, such as using headless browsers, implementing delays between requests, and utilizing proxy servers to avoid IP blocking. Additionally, tools like Selenium and Puppeteer can help handle dynamic content and simulate user interactions.

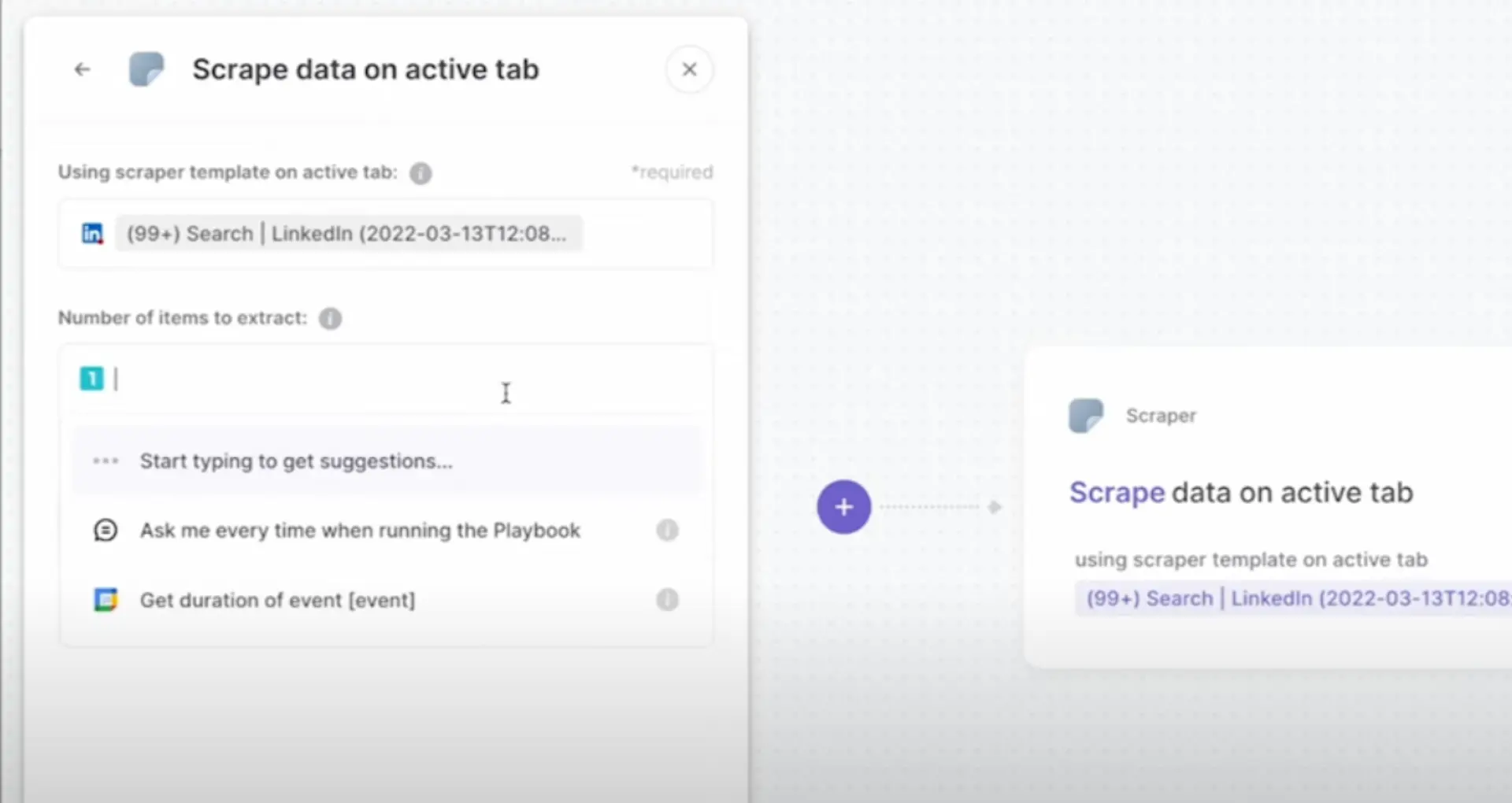

Use Bardeen's web scraper to collect data effortlessly. Automate routine scraping tasks and save valuable time with our no-code tool.

Understanding the basics of web scraping is crucial for anyone looking to harness the power of data from websites. By being aware of the challenges and adopting the right techniques, you can effectively scrape data while respecting website terms of service and ethical guidelines.

Multithreading is a technique that allows multiple tasks to be executed concurrently within a single program. By leveraging multithreading in web scraping, you can significantly improve the performance and speed of your scraping operations. Instead of sequentially processing each web page, multithreading enables you to handle multiple pages simultaneously, reducing the overall execution time.

In web scraping, this typically means placing the URLs to scrape in a shared queue and letting a small pool of worker threads pull from it. Here's a basic example of how multithreading can be implemented in Python for faster web scraping:

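    import threading
    from queue import Queue

    def scrape_page(url):
        # Scraping logic goes here
        # ...
        pass

    def worker():
        # Pull URLs from the queue until the program exits
        while True:
            url = url_queue.get()
            try:
                scrape_page(url)
            finally:
                # Always mark the task as done so join() cannot hang
                url_queue.task_done()

    url_queue = Queue()
    num_threads = 4

    for i in range(num_threads):
        t = threading.Thread(target=worker)
        t.daemon = True  # Daemon threads exit when the main thread does
        t.start()

    urls = [...]  # List of URLs to scrape

    # Add URLs to the queue
    for url in urls:
        url_queue.put(url)

    # Block until every queued URL has been processed
    url_queue.join()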

In this example:

- We define a `scrape_page` function that contains the scraping logic for a single web page.
- The `worker` function acts as the task executor for each thread. It continuously retrieves URLs from the queue, scrapes the corresponding web page, and marks the task as done.
- We create a `Queue` to store the URLs that need to be scraped.
- We start multiple threads based on the `num_threads` variable, and each thread executes the `worker` function.
- We add the URLs to the queue and wait for all tasks to complete with `url_queue.join()`.

By distributing the scraping tasks across multiple threads, you can overlap the time spent waiting on the network and significantly reduce the overall scraping time. However, the actual performance gain depends on factors such as network latency and the website's response time. In CPython, the global interpreter lock (GIL) also means that threads do not speed up CPU-bound work such as heavy parsing.

Multithreading is particularly useful when the majority of the scraping time is spent waiting for I/O operations, such as network requests. By allowing other threads to continue execution while waiting for a response, you can optimize resource utilization and improve scraping efficiency.
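The same worker-pool idea can also be expressed with the standard library's `concurrent.futures.ThreadPoolExecutor`, which manages the queue and the threads for you. The sketch below is an illustration rather than a drop-in scraper: `fake_fetch` is a hypothetical stand-in that sleeps instead of making a network request, and the URLs are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(url):
    """Hypothetical stand-in for a network request: sleep, then return a fake body."""
    time.sleep(0.05)  # simulates waiting on I/O
    return f"<html>{url}</html>"


def scrape_all(urls, max_workers=4):
    """Fetch all URLs using a pool of threads and return the bodies in input order."""
    # executor.map yields results in the same order as the input URLs
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(fake_fetch, urls))


urls = [f"https://example.com/page/{i}" for i in range(8)]
pages = scrape_all(urls)
print(len(pages), pages[0])
```

Because the threads spend their time sleeping (as they would waiting on a server), the eight simulated requests overlap instead of running one after another.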

While multithreading is effective for I/O-bound tasks, multiprocessing is the preferred approach for CPU-bound operations, such as parsing large volumes of HTML. Multiprocessing allows you to utilize multiple CPU cores, enabling true parallel execution of scraping tasks.

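The key difference between the two is that threads run inside a single process and share its memory, whereas multiprocessing starts separate processes, each with its own Python interpreter and memory space. In CPython, the global interpreter lock (GIL) allows only one thread to execute Python bytecode at a time, so threads help mainly with I/O-bound work, while processes can run CPU-bound work on several cores at the cost of higher startup and communication overhead.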

To set up a multiprocessing environment in Python for faster web scraping, follow these steps:

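    # 1. Import the necessary modules
    from multiprocessing import Pool

    import requests
    from bs4 import BeautifulSoup

    # 2. Define the scraping function for a single page
    def scrape_page(url):
        response = requests.get(url)
        soup = BeautifulSoup(response.text, 'html.parser')
        # Extract desired data from the parsed HTML
        # ...
        # Return the extracted data so that it ends up in `results`

    # The guard keeps worker processes from re-running this setup on import
    if __name__ == '__main__':
        # 3. Create a pool of worker processes
        pool = Pool(processes=4)  # Adjust the number of processes based on your system

        # 4. Prepare the list of URLs
        urls = [...]  # List of URLs to scrape

        # 5. Use map to distribute the scraping tasks among the worker processes
        results = pool.map(scrape_page, urls)

        # 6. Close the pool and wait for the workers to finish
        pool.close()
        pool.join()

Finally, process the scraped data from the `results` list.

By leveraging multiprocessing, you can significantly speed up your web scraping tasks, especially when dealing with a large number of URLs. The scraping workload is distributed among multiple processes, allowing for parallel execution and efficient utilization of system resources.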

However, it's important to note that using multiprocessing may put a higher load on the target website, so it's crucial to be mindful of the website's terms of service and implement appropriate throttling mechanisms to avoid overloading the server.
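One simple way to throttle is to enforce a minimum interval between requests. The sketch below is a minimal, single-process illustration; the `Throttle` class, its `min_interval` parameter, and the injectable `clock` and `sleep` arguments are assumptions made for this example, not part of any library. With several worker processes, each worker would need its own throttle or some shared limit.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive calls to wait() (hypothetical helper)."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time at which the previous request was released

    def wait(self):
        """Sleep just long enough to respect min_interval; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
            if delay > 0:
                self._sleep(delay)
        self._last = now + delay
        return delay


throttle = Throttle(min_interval=0.1)
for url in ["https://example.com/1", "https://example.com/2"]:
    throttle.wait()
    # The request for `url` would go here
```

The first call returns immediately; later calls pause only for whatever part of the interval has not already elapsed.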

Save time with the Bardeen scraper. Automate routine scraping tasks without coding.

Asynchronous programming is a powerful approach that can significantly boost the performance of web scraping operations. By leveraging Python's asyncio library and the aiohttp package, you can efficiently scrape multiple web pages concurrently, reducing the overall execution time.

Here's a step-by-step guide on setting up an asynchronous web scraping script using Python's asyncio and aiohttp:

First, install the required packages:

    pip install aiohttp beautifulsoup4

Then import the modules, define coroutines for fetching and parsing, and run them from a `main` coroutine:

    import asyncio

    import aiohttp
    from bs4 import BeautifulSoup

    async def fetch_html(session, url):
        # Fetch one page and return its HTML as text
        async with session.get(url) as response:
            return await response.text()

    async def parse_html(html):
        soup = BeautifulSoup(html, 'html.parser')
        # Extract desired data from the parsed HTML
        # ...

    async def main():
        # Reuse one session for all requests
        async with aiohttp.ClientSession() as session:
            urls = [...]  # List of URLs to scrape

            # Schedule all fetches so they run concurrently
            tasks = []
            for url in urls:
                task = asyncio.create_task(fetch_html(session, url))
                tasks.append(task)

            # Wait for every fetch to finish
            htmls = await asyncio.gather(*tasks)

            for html in htmls:
                await parse_html(html)

    asyncio.run(main())

By utilizing asyncio and aiohttp, you can send multiple requests concurrently without waiting for each request to complete before moving on to the next. This asynchronous approach allows for efficient utilization of system resources and significantly reduces the overall scraping time.

Keep in mind that while asynchronous scraping can greatly improve performance, it's important to be mindful of the target website's terms of service and implement appropriate rate limiting and throttling mechanisms to avoid overloading the server.
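One common way to cap concurrency in asyncio is an `asyncio.Semaphore`. The sketch below illustrates the idea under stated assumptions: `fake_fetch` is a hypothetical stand-in that sleeps instead of calling aiohttp, the `stats` dictionary exists only to show the limit being respected, and the limit of 3 is arbitrary.

```python
import asyncio


async def fake_fetch(url):
    """Hypothetical stand-in for an aiohttp request."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def fetch_limited(semaphore, url, stats):
    # Only as many coroutines as the semaphore allows can be in this block at once
    async with semaphore:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        try:
            return await fake_fetch(url)
        finally:
            stats["active"] -= 1


async def scrape_all(urls, limit=3):
    """Fetch all URLs with at most `limit` requests in flight; return pages and peak concurrency."""
    semaphore = asyncio.Semaphore(limit)
    stats = {"active": 0, "peak": 0}
    pages = await asyncio.gather(*(fetch_limited(semaphore, u, stats) for u in urls))
    return pages, stats["peak"]


urls = [f"https://example.com/page/{i}" for i in range(10)]
pages, peak = asyncio.run(scrape_all(urls))
print(len(pages), "pages fetched; peak concurrency:", peak)
```

All ten tasks are scheduled at once, but the semaphore keeps the number of simultaneous requests at or below the chosen limit.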

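When scraping websites at scale, using a single IP address can quickly lead to getting blocked. To avoid this, implementing proxy rotation and proper session management is crucial. Key strategies include maintaining a pool of proxies, rotating through it across requests, and managing sessions so that cookies and headers stay consistent.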

By effectively managing your proxy pool and sessions, you can significantly reduce the chances of getting blocked while scraping websites. However, it's important to respect website terms of service and adhere to ethical scraping practices.
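A round-robin rotation over a proxy pool can be sketched with `itertools.cycle`. The proxy addresses below are placeholders and `ProxyRotator` is a hypothetical helper; the returned dictionary follows the `{"http": ..., "https": ...}` mapping that the `requests` library accepts through its `proxies` argument.

```python
import itertools


class ProxyRotator:
    """Hands out proxies from a fixed pool in round-robin order (hypothetical helper)."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(proxies)

    def next_proxies(self):
        """Return a mapping in the shape expected by requests' `proxies=` argument."""
        proxy = next(self._cycle)
        return {"http": proxy, "https": proxy}


# Placeholder addresses; replace with proxies you are allowed to use
rotator = ProxyRotator(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
for _ in range(3):
    print(rotator.next_proxies())
```

With `requests`, each call would pass the mapping along, for example `session.get(url, proxies=rotator.next_proxies())`, while a `requests.Session` object keeps cookies between calls.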

Popular tools and libraries that can help with proxy rotation and session management include Python's `requests` library, which has built-in proxy support.

Implementing proxy rotation and session management requires careful planning and ongoing monitoring to ensure the success and reliability of your web scraping projects. By utilizing these techniques effectively, you can enhance your scraping performance and gather data more efficiently.


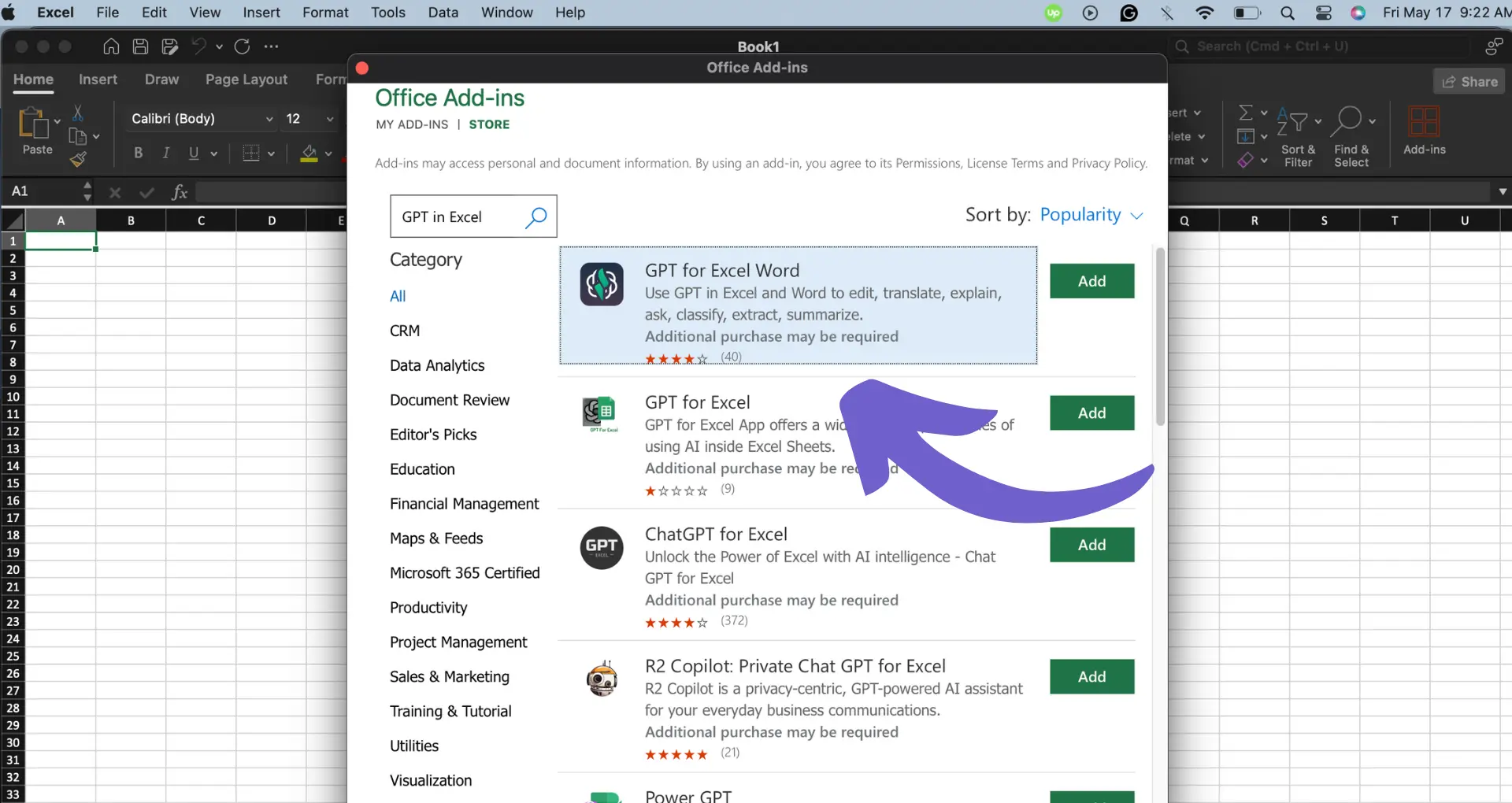

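Numerous tools and frameworks are available to simplify the web scraping process, each with unique features and capabilities. The ones used or mentioned in this post include Requests and Beautiful Soup for fetching and parsing pages, aiohttp for asynchronous requests, and Selenium and Puppeteer for dynamic content.

In addition to these, there are cloud-based scraping solutions and no-code tools, such as Bardeen's web scraper, that provide user-friendly interfaces and require minimal coding.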

When choosing a web scraping tool or framework, consider factors such as ease of use, scalability, and the specific requirements of your scraping project. It's also essential to be mindful of the legal and ethical aspects of web scraping and ensure compliance with website terms of service.
