In today's fast-paced real estate market, staying ahead of the competition is crucial for success. Web scraping Realtor.com can give you a significant advantage by providing access to valuable data and insights that can help you generate more leads and close more deals. By mastering this skill in 2024, you'll be well-positioned to thrive in the ever-evolving real estate landscape.

Imagine having the ability to quickly and efficiently gather property details, pricing information, and contact details for potential clients. With web scraping, you can save countless hours of manual research and focus on what matters most: connecting with leads and growing your business.

In this comprehensive guide, we'll take you through the process of web scraping Realtor.com step by step. We'll cover both a hands-on, code-based approach using Python and Selenium and automated, no-code techniques using tools like Bardeen. By the end of this guide, you'll have the knowledge and skills you need to start using web scraping for your real estate business.

So, what are you waiting for? Get ready to dive in and discover how web scraping can revolutionize your lead generation strategy. With the insights and tactics you'll learn here, you'll be well on your way to becoming a real estate rockstar in no time!

Before diving into the technical aspects of web scraping Realtor.com, it's crucial to understand the legal and ethical considerations involved. While the data on Realtor.com is publicly accessible, it's essential to respect their terms of service and ensure that your scraping activities don't infringe on privacy rights or cause harm to the website's functionality.

Realtor.com has specific terms of service that outline acceptable use of their website. It's important to review these terms carefully and make sure your web scraping practices align with them. Failing to comply can lead to consequences such as cease-and-desist letters or even lawsuits.

For example, Realtor.com may prohibit the use of automated tools to access their website or limit the frequency of requests made to their servers. They may also have restrictions on the commercial use of their data. By familiarizing yourself with their terms of service, you can avoid unintentional violations and ensure a smooth web scraping experience.
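If you do go ahead, one practical way to respect crawl restrictions and request-frequency limits is to check the site's robots.txt rules and pause between requests. The minimal sketch below uses Python's standard urllib.robotparser; the sample rules, the user-agent string, and the delay values are hypothetical, and passing a robots.txt check is not a substitute for reading the terms of service.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only. Against the real site
# you would call parser.set_url("https://www.realtor.com/robots.txt") and
# parser.read() instead of parsing these sample lines.
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
""".splitlines()

USER_AGENT = "my-research-bot"  # hypothetical user-agent string


def build_parser(lines):
    """Parse robots.txt lines into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(lines)
    return parser


def polite_urls(parser, urls, default_delay=3.0, sleep=time.sleep):
    """Yield only the URLs robots.txt allows, pausing between each one."""
    # Use the site's Crawl-delay if it declares one, else our own default.
    delay = parser.crawl_delay(USER_AGENT) or default_delay
    first = True
    for url in urls:
        if not parser.can_fetch(USER_AGENT, url):
            continue  # skip paths the site asks crawlers to avoid
        if not first:
            sleep(delay)  # throttle to limit load on the server
        first = False
        yield url
```

The sleep function is a parameter so the throttling logic can be tested without actually waiting.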

When scraping data from Realtor.com, it's crucial to handle the information responsibly and ethically. The data you collect may contain personal information, such as names, addresses, and contact details of property owners or real estate agents. It's your responsibility to ensure that this data is not misused or shared without proper consent.

Consider implementing data anonymization techniques to protect individual privacy. This may involve removing or encrypting personally identifiable information before storing or using the scraped data. Additionally, be transparent about your data collection practices and provide clear information about how the data will be used and who will have access to it.
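As an illustration of the "removing or encrypting" step, here is a minimal sketch that drops some personal fields outright and replaces others with a keyed HMAC-SHA256 digest. Note that this is pseudonymization (a swapped-in technique, not encryption): identical values still match each other, which helps with de-duplication, but the originals can't be read back. The field names and the secret key are hypothetical placeholders.

```python
import hashlib
import hmac

# Hypothetical field names; adjust to whatever your scraper actually collects.
PII_FIELDS_TO_HASH = ("agent_email", "agent_phone")
PII_FIELDS_TO_DROP = ("agent_name",)


def pseudonymize(record, secret_key):
    """Return a copy of a scraped record with personal data masked.

    Fields in PII_FIELDS_TO_DROP are removed. Non-empty fields in
    PII_FIELDS_TO_HASH are replaced with a hex HMAC-SHA256 digest keyed by
    secret_key (bytes). The input record is not modified.
    """
    cleaned = {k: v for k, v in record.items() if k not in PII_FIELDS_TO_DROP}
    for field in PII_FIELDS_TO_HASH:
        value = cleaned.get(field)
        if value:
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            cleaned[field] = digest.hexdigest()
    return cleaned
```

Keep the key out of your code and data store; anyone holding it could test guesses (such as a known phone number) against the hashes.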

By prioritizing ethical data usage and respecting privacy rights, you can build trust with your audience and avoid potential legal and reputational risks associated with mishandling scraped data.

Understanding the legal and ethical aspects of web scraping Realtor.com is essential for conducting your scraping activities responsibly. By adhering to their terms of service and handling data ethically, you can focus on extracting valuable insights while minimizing risks. In the following section, we'll explore the technical setup required to start scraping Realtor.com effectively.

Using Bardeen's playbook for Realtor.com search results, you can simplify your web scraping efforts. Save time and focus on important tasks with just one click.

Before you can start extracting data from Realtor.com, it's essential to have the right tools and software in place. Python, along with libraries like Selenium and Undetected ChromeDriver, provides a powerful foundation for building your web scraping environment.

To begin your web scraping journey, you'll need Python installed on your computer. Python is a versatile programming language that offers a wide range of libraries specifically designed for web scraping tasks. Additionally, you'll require Selenium, a browser automation tool that allows you to interact with websites programmatically. Selenium works in conjunction with a web driver, such as ChromeDriver, to control the browser.

Another crucial component is Undetected ChromeDriver, a library that patches ChromeDriver to make automated browsing harder for websites to detect. Using it can reduce, though not eliminate, the risk of your scraper being blocked or banned by Realtor.com's anti-scraping measures.

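To set up your development environment for web scraping, install Python 3 and the Google Chrome browser, then install the two libraries with pip:

```shell
pip install selenium
pip install undetected-chromedriver
```

Selenium 4.6 and later include Selenium Manager, which downloads a matching ChromeDriver automatically, and Undetected ChromeDriver downloads and patches its own driver binary, so you usually don't need to install ChromeDriver by hand. With these tools in place, you're ready to start building your web scraper for Realtor.com. They provide a solid foundation for automating the data extraction process and handling the complexities of web scraping.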
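Before writing the scraper itself, it can help to confirm the setup. The sketch below checks whether both libraries are importable without actually importing them, and wraps browser startup in a small helper. The helper names are hypothetical; uc.Chrome() and its headless argument come from the undetected-chromedriver package, and the import is deferred so the check still works when the package is missing.

```python
from importlib.util import find_spec

REQUIRED_MODULES = ("selenium", "undetected_chromedriver")


def check_environment():
    """Report which scraping libraries are installed, without importing them."""
    return {name: find_spec(name) is not None for name in REQUIRED_MODULES}


def make_driver(headless=False):
    """Start a Chrome session through Undetected ChromeDriver."""
    # Imported here so check_environment() works even if the package is absent.
    import undetected_chromedriver as uc

    return uc.Chrome(headless=headless)
```

Usage: if every value in check_environment() is True, call driver = make_driver(), load a page with driver.get("https://www.realtor.com/"), and always finish with driver.quit() so the browser process doesn't linger.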

Setting up your web scraping environment correctly is crucial for successful data extraction from Realtor.com. With the right tools in place, you'll be well-equipped to navigate the website, handle dynamic content, and extract the desired real estate data efficiently. In the next section, we'll dive into the process of extracting listing data from Realtor.com and explore the techniques for parsing and storing the scraped information.

With your web scraping environment set up, it's time to dive into the process of extracting key data points from property listings on Realtor.com. By leveraging the power of Selenium, you can navigate the website's structure and retrieve valuable information such as price, address, and property features.

To extract data from Realtor.com listings, you need to identify the specific elements that contain the desired information. Start by inspecting the HTML structure of a typical listing page using your browser's developer tools. Look for HTML tags, classes, or IDs that consistently wrap around the data points you want to scrape.

For example, you might find that the listing price is always contained within a <span> element with a specific class name, while the address is located in a <div> with a unique ID. By identifying these patterns, you can create targeted CSS selectors or XPath expressions to locate and extract the data reliably.
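Once you've identified the selectors, it's convenient to keep them in one place so that a markup change only means editing a single dictionary. In this minimal sketch the selector values are hypothetical (inspect a live listing card and replace them), and extract_fields works with any Selenium WebElement. It uses find_elements(), which returns an empty list rather than raising when nothing matches, so a missing field becomes None instead of crashing the run.

```python
# Selenium's By.CSS_SELECTOR constant is the string "css selector"; using the
# literal keeps this helper importable without Selenium installed.
CSS = "css selector"

# Hypothetical selectors for illustration only: Realtor.com's markup changes,
# so confirm each one in your browser's developer tools.
LISTING_SELECTORS = {
    "price": '[data-testid="card-price"]',
    "address": '[data-testid="card-address"]',
    "beds": '[data-testid="property-meta-beds"]',
}


def extract_fields(card, selectors=LISTING_SELECTORS):
    """Read the text of each selector inside one listing card element."""
    data = {}
    for field, selector in selectors.items():
        matches = card.find_elements(CSS, selector)
        data[field] = matches[0].text.strip() if matches else None
    return data
```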

Selenium provides a powerful set of tools for automating web browsers and interacting with web pages. By using Selenium's WebDriver, you can programmatically navigate to Realtor.com, click on links, fill out forms, and extract data from the rendered HTML.

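To parse the website's HTML structure, you can use Selenium's find_element() and find_elements() methods with a locator strategy from the By class, for example driver.find_element(By.CSS_SELECTOR, selector) or driver.find_elements(By.XPATH, expression). These methods let you locate specific elements on the page based on the CSS selectors or XPath expressions you identified earlier. Older helpers such as find_element_by_css_selector() were removed in Selenium 4.3, so code that uses them fails on current versions.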

Once you have located the desired elements, you can extract the text content, attribute values, or even interact with the elements (e.g., clicking a button or filling out a form) using Selenium's methods. This flexibility enables you to handle dynamic content and navigate through multiple pages if necessary.
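Extracted text usually needs cleaning before you can sort or filter on it. These small helpers, a sketch with hypothetical names, turn displayed strings such as "$1,250,000" or "2.5 bath" into numbers. They assume full-digit prices: an abbreviated format like "$1.2M" would need extra handling. For attribute values such as a listing link, Selenium's element.get_attribute("href") returns the attribute as a string.

```python
import re


def parse_price(text):
    """Return the first whole-dollar amount in text like '$1,250,000' as an int."""
    match = re.search(r"\d[\d,]*", text or "")
    return int(match.group().replace(",", "")) if match else None


def parse_count(text):
    """Return the first number in text like '3 bed' or '2.5 bath' as a float."""
    match = re.search(r"\d+(?:\.\d+)?", text or "")
    return float(match.group()) if match else None
```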


Extracting listing data from Realtor.com requires careful analysis of the website's HTML structure and the use of Selenium to navigate and parse the data effectively. By targeting specific elements and leveraging Selenium's capabilities, you can retrieve valuable information from property listings programmatically. In the next section, we'll explore how to automate the data collection process by handling pagination and iterating through multiple pages of listings.

Web scraping often involves dealing with pagination, as websites spread their data across multiple pages. To collect comprehensive data, it's crucial to automate the process of navigating through these pages. By handling pagination effectively, you can ensure that your web scraper captures all the relevant information without missing any important details.

Realtor.com implements pagination to display its property listings in a user-friendly manner. Each page typically contains a limited number of listings, and users can navigate to subsequent pages using pagination links or buttons. To automate data collection, you need to identify the pagination pattern and determine how to programmatically interact with it.

Take a close look at the URL structure as you navigate through the pages. Often, pagination URLs follow a specific pattern, such as incrementing page numbers or using query parameters. Understanding this pattern will help you construct the URLs for each page dynamically in your web scraping script.
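Once you know the pattern, building page URLs is simple string work. The sketch below assumes Realtor.com search results append a "/pg-N" suffix for pages after the first (for example .../New-York_NY/pg-2). Treat that as an assumption to verify in your browser, since the site can change its URL scheme at any time.

```python
def page_url(search_url, page):
    """Build the URL for one results page, assuming the '/pg-N' suffix pattern."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    base = search_url.rstrip("/")
    # Page 1 is the plain search URL; later pages add the suffix.
    return base if page == 1 else f"{base}/pg-{page}"


def page_urls(search_url, max_pages):
    """Return URLs for pages 1 through max_pages."""
    return [page_url(search_url, n) for n in range(1, max_pages + 1)]
```

Generating URLs directly avoids depending on a "next" button's selector, but you still need to stop when a page returns no listings.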

Selenium is a powerful tool for automating web browsers and interacting with web pages. It allows you to simulate user actions, such as clicking on pagination links or buttons, to navigate through the pages seamlessly.

To automate pagination with Selenium, you can use find_element() or find_elements() with By.CSS_SELECTOR or By.XPATH to locate the pagination elements on the page. Once you have identified the elements, you can call the element's click() method to simulate a click and load the next page.

Here's an example of how you can automate pagination using Selenium in Python:

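```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

driver = webdriver.Chrome()
driver.get("https://www.realtor.com/realestateandhomes-search/New-York_NY")
wait = WebDriverWait(driver, 15)
results = []

try:
    while True:
        # Wait until the listing cards on the current page have loaded
        listings = wait.until(
            EC.presence_of_all_elements_located(
                (By.CSS_SELECTOR, ".component_property-card")
            )
        )
        for listing in listings:
            # Extract relevant data from each listing (here, its visible text)
            results.append(listing.text)

        # Check if there is a next page button
        next_button = driver.find_elements(By.CSS_SELECTOR, ".pagination .next")
        if not next_button:
            break
        next_button[0].click()
        # Wait for the old cards to be replaced before scraping again
        wait.until(EC.staleness_of(listings[0]))
finally:
    driver.quit()
```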

In this example, the script uses Selenium to navigate to the initial search results page on Realtor.com. It then enters a loop where it extracts data from the listings on the current page. After processing the listings, it checks for the presence of a "next" button. If the button is found, it simulates a click to load the next page. The loop continues until there are no more pages to navigate.

Automating pagination with Selenium allows you to handle dynamic content and interact with the website as a user would, enabling you to collect data from multiple pages effortlessly. By leveraging the power of Selenium, you can build robust web scrapers that can handle pagination and extract comprehensive data from Realtor.com.

Pagination handling is a critical aspect of web scraping, especially when dealing with large datasets spread across multiple pages. By automating the process of navigating through pagination, you can ensure that your web scraper collects all the relevant data efficiently. In the next section, we'll explore how you can enhance your data extraction capabilities by leveraging ScrapingBee, a powerful web scraping tool.

ScrapingBee is a tool that can help you overcome common challenges when web scraping Realtor.com. By routing your requests through ScrapingBee, you can get past many anti-scraping mechanisms and extract data more consistently. Additionally, Bardeen lets you automate the scraping workflow and store the extracted data directly in platforms like Google Sheets or Airtable.

Realtor.com employs various anti-scraping techniques to prevent automated data collection, such as CAPTCHAs and IP bans. These measures can hinder your scraping efforts and lead to incomplete or inconsistent data. ScrapingBee addresses these challenges by providing a robust infrastructure that handles CAPTCHAs and rotates IP addresses automatically.

By leveraging ScrapingBee's API, you can send your scraping requests through their servers, which handle much of the work of getting past anti-scraping mechanisms. This makes it much less likely, though not guaranteed, that your scraper will be blocked or served a CAPTCHA.
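To make this concrete, here is a minimal sketch of a ScrapingBee request using only Python's standard library. The endpoint and the api_key, url, render_js, and premium_proxy parameters reflect ScrapingBee's public HTML API documentation as best we know it; check the current docs before relying on them. The API key is a placeholder, and fetch_html makes a real request that counts against your credits.

```python
import urllib.parse
import urllib.request

# ScrapingBee's HTML API endpoint (verify against the current documentation).
SCRAPINGBEE_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def scrapingbee_url(api_key, target_url, render_js=True, premium_proxy=False):
    """Build a ScrapingBee request URL for one target page."""
    params = {
        "api_key": api_key,
        "url": target_url,  # urlencode() escapes the target URL for us
        "render_js": "true" if render_js else "false",
    }
    if premium_proxy:
        params["premium_proxy"] = "true"
    return SCRAPINGBEE_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_html(api_key, target_url, timeout=60, **options):
    """Fetch a page's rendered HTML through ScrapingBee."""
    request_url = scrapingbee_url(api_key, target_url, **options)
    with urllib.request.urlopen(request_url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

The returned HTML can then be parsed with the same selectors you would use in Selenium, for example with an HTML parsing library.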

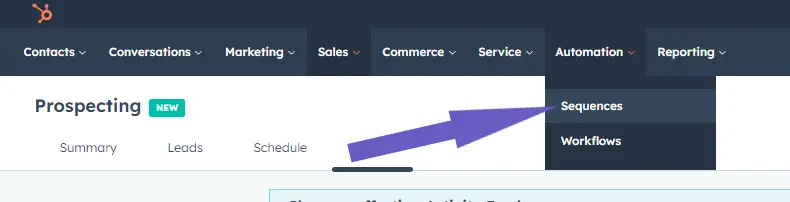

Bardeen is a powerful automation tool that can be seamlessly integrated with ScrapingBee to further enhance your web scraping workflow. With Bardeen, you can create automated workflows that trigger the scraping process, handle data extraction, and store the scraped data in your desired destination.

By combining ScrapingBee and Bardeen, you can automate the entire process of scraping Realtor.com. Bardeen can be configured to initiate the scraping job, pass the necessary parameters to ScrapingBee, and receive the extracted data. This automation eliminates the need for manual intervention and allows you to schedule regular scraping tasks.

Furthermore, Bardeen enables you to directly store the scraped data in platforms like Google Sheets or Airtable. By setting up the appropriate integrations, you can automatically populate spreadsheets or databases with the extracted real estate data. This streamlines your data collection process and ensures that the data is readily available for analysis and decision-making.
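If you're running your own Python scraper rather than a Bardeen integration, or simply want a local backup, a CSV file is a simple format that both Google Sheets and Airtable can import. This sketch uses the standard csv module; the column names are hypothetical and should match whatever fields you extract.

```python
import csv

FIELDNAMES = ["address", "price", "beds", "url"]  # hypothetical columns


def write_listings_csv(rows, path):
    """Write listing dicts to a CSV file and return how many rows were written.

    Keys missing from a row are left blank; keys not in FIELDNAMES are ignored.
    """
    rows = list(rows)  # accept any iterable, including generators
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```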


You may also find Bardeen's no-code scraping approach useful.

Leveraging ScrapingBee and Bardeen together provides a powerful combination for enhanced data extraction from Realtor.com. By bypassing anti-scraping measures and automating the scraping workflow, you can consistently gather valuable real estate data at scale, saving time and effort in the process.

Knowing how to web scrape Realtor.com for real estate leads is crucial for staying competitive in the industry.

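In this guide, you discovered the legal and ethical considerations of scraping Realtor.com, how to set up a Python environment with Selenium and Undetected ChromeDriver, how to extract listing data by targeting specific HTML elements, how to automate pagination across multiple pages of results, and how ScrapingBee and Bardeen can help you get past anti-scraping measures and automate your workflow.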

With these insights, you're well-equipped to scrape Realtor.com like a pro. Happy scraping, and may your leads be plentiful!

Bardeen is SOC 2 Type II, GDPR, and CASA Tier 2 and 3 certified, so you can automate with confidence at any scale.

Bardeen is an automation and workflow platform designed to help GTM teams eliminate manual tasks and streamline processes. It connects and integrates with your favorite tools, enabling you to automate repetitive workflows, manage data across systems, and enhance collaboration.

Bardeen acts as a bridge to enhance and automate workflows. It can reduce your reliance on tools focused on data entry and CRM updating, lead generation and outreach, reporting and analytics, and communication and follow-ups.

Bardeen is ideal for GTM teams across various roles including Sales (SDRs, AEs), Customer Success (CSMs), Revenue Operations, Sales Engineering, and Sales Leadership.

Bardeen integrates broadly with CRMs, communication platforms, lead generation tools, project and task management tools, and customer success tools. These integrations connect workflows and ensure data flows smoothly across systems.

Bardeen supports a wide variety of use cases across different teams, such as:

Sales: Automating lead discovery, enrichment and outreach sequences. Tracking account activity and nurturing target accounts.

Customer Success: Preparing for customer meetings, analyzing engagement metrics, and managing renewals.

Revenue Operations: Monitoring lead status, ensuring data accuracy, and generating detailed activity summaries.

Sales Leadership: Creating competitive analysis reports, monitoring pipeline health, and generating daily/weekly team performance summaries.