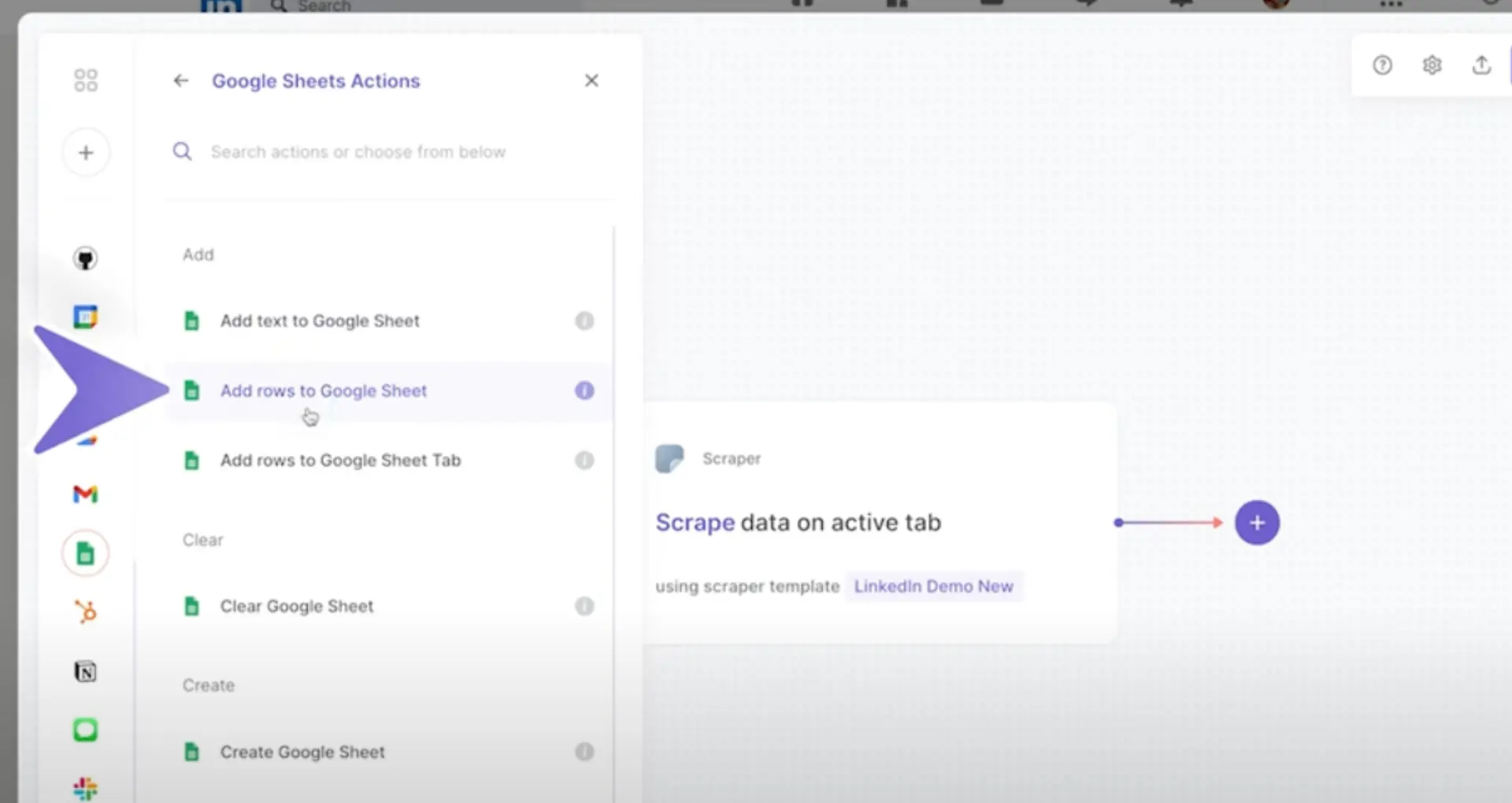

Use tools like Bardeen to automate LinkedIn post scraping.

By the way, we're Bardeen. We build a free AI Agent for doing repetitive tasks.

If you're interested in LinkedIn data, our LinkedIn Data Scraper can automate post extraction and analysis. It saves you time and effort.

Scraping LinkedIn posts is a game-changer for businesses seeking valuable insights and leads. With over 900 million users, LinkedIn holds a treasure trove of data waiting to be unlocked. In this step-by-step guide, we'll walk you through the process of extracting post content, author details, engagement metrics, and more.

You'll discover the best tools and techniques to scrape LinkedIn posts efficiently while respecting user privacy and avoiding detection. Whether you prefer the classic manual approach or the cutting-edge power of AI agents like Bardeen, this guide has you covered.

Get ready to take your LinkedIn data game to the next level and skyrocket your lead generation efforts!

Before diving into the process of scraping LinkedIn posts, it's crucial to understand the platform's data structure and policies. LinkedIn stores a wealth of information, including posts, comments, likes, and shares, which can provide valuable insights for businesses and researchers.

However, it's essential to approach LinkedIn scraping with caution and respect for user privacy. LinkedIn has specific policies in place regarding web scraping and data usage, and violating these guidelines can result in account restrictions or even legal consequences.

LinkedIn's data is organized in a hierarchical manner, with user profiles serving as the foundation. Each profile contains various data points, such as work experience, education, skills, and connections. Posts, comments, likes, and shares are associated with individual user profiles, forming a complex web of interactions.

Understanding this structure is crucial for effectively navigating and extracting relevant data from the platform. By targeting specific data points and relationships, you can gather meaningful insights while minimizing unnecessary data collection.

LinkedIn has strict policies regarding web scraping and automated data collection. The platform's terms of service explicitly prohibit the use of bots, scrapers, or other automated means to access and extract data without permission.

Violating these policies can lead to account suspension or termination, as well as potential legal action. It's crucial to review and comply with LinkedIn's terms of service and privacy policy before engaging in any scraping activities.

When scraping LinkedIn data, it's essential to prioritize ethical practices and respect user privacy. This includes obtaining consent where necessary, anonymizing personal information, and using the collected data responsibly.

Avoid scraping sensitive or private information, such as email addresses or phone numbers, without explicit permission. Focus on gathering publicly available data that aligns with your research or business objectives.

To effectively navigate the world of LinkedIn scraping, familiarize yourself with key terms such as API (Application Programming Interface), web scraping, and data extraction.

Understanding these concepts will help you make informed decisions when planning and executing your LinkedIn scraping projects.

By grasping LinkedIn's data structure, adhering to the platform's policies, and prioritizing ethical practices, you can effectively scrape LinkedIn with Bardeen while mitigating risks and ensuring compliance.

In the next section, we'll explore the essential tools and techniques for successful LinkedIn scraping, empowering you to gather valuable insights while respecting the platform's guidelines.

When it comes to scraping LinkedIn posts, selecting the appropriate tools is crucial for success. Various web scraping tools offer different features and capabilities. Understanding the pros and cons of each tool and their suitability for LinkedIn scraping will help you make an informed decision.

Additionally, using proxies and rotating IP addresses is essential to avoid detection and potential bans from LinkedIn. Specialized LinkedIn scraping tools can streamline the process and provide targeted functionality for extracting data from the platform.

Scrapy, BeautifulSoup, and Selenium are among the most popular web scraping tools available. Scrapy is a powerful and flexible framework written in Python, ideal for large-scale scraping projects. BeautifulSoup, on the other hand, is a Python library that simplifies the parsing of HTML and XML documents, making it easy to extract specific data points.

Selenium is a browser automation tool that allows you to interact with web pages programmatically. It is particularly useful for scraping dynamic content and handling complex user interactions on LinkedIn.

When choosing a tool for LinkedIn scraping, consider factors such as ease of use, scalability, and compatibility with your programming language of choice. Scrapy is highly scalable and offers a wide range of features, but it may have a steeper learning curve compared to BeautifulSoup.

Selenium provides browser automation capabilities, which can be beneficial for navigating LinkedIn's dynamic content, but it may be slower compared to other tools. Assess your specific requirements and technical expertise to select the most suitable tool for your LinkedIn scraping project.

LinkedIn actively monitors and restricts scraping activities to protect user data. To avoid detection and potential bans, it is crucial to use proxies and rotate IP addresses during the scraping process. Proxies act as intermediaries between your scraping script and LinkedIn, masking your real IP address and making it appear as if the requests are coming from different sources.

Implementing IP rotation involves regularly switching between different proxy servers to distribute the scraping load and minimize the risk of being flagged by LinkedIn's anti-scraping mechanisms. This helps maintain the stability and longevity of your scraping operation.

In addition to general web scraping tools, there are specialized tools designed specifically for LinkedIn scraping. Phantombuster and TexAu are two popular options that offer a range of features tailored to extracting data from LinkedIn.

These tools often provide pre-built modules or templates for common scraping tasks, such as extracting profile information, post content, and engagement metrics. They may also include built-in proxy management and IP rotation functionality to simplify the scraping process and reduce the risk of detection.

Leveraging the right combination of tools and techniques is key to effectively scraping LinkedIn posts while minimizing the chances of being detected or banned.

Save time and reduce manual effort in LinkedIn scraping tasks. Use Bardeen's LinkedIn Profile Data Playbook to automate and integrate data directly into your workflow.

By carefully selecting the appropriate tools, utilizing proxies and IP rotation, and considering LinkedIn-specific solutions, you can optimize your scraping efforts and extract valuable data from the platform.

Get ready to dive into the nitty-gritty of LinkedIn post scraping! In the upcoming section, we'll walk you through a step-by-step guide on setting up your scraping environment, inspecting LinkedIn's HTML structure, and extracting post data using Python and your chosen tools.

Scraping LinkedIn posts involves setting up the scraping environment, inspecting the HTML structure, extracting relevant data, and saving the scraped information. This step-by-step guide will walk you through the process of scraping LinkedIn posts using Python and popular tools like Selenium and Beautiful Soup.

By following these steps, you'll be able to extract valuable data from LinkedIn posts, such as post content, author details, engagement metrics, and more. Let's dive into the details of each step.

Before you start scraping LinkedIn posts, you need to set up your scraping environment. This involves installing Python, setting up a virtual environment (optional but recommended), and installing the necessary libraries.

Make sure you have Python installed on your system. Create a virtual environment to keep your project's dependencies isolated. Then, install libraries like Selenium, Beautiful Soup, and Pandas using pip. These libraries will help you automate browser interactions, parse HTML, and handle data efficiently.
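To confirm the environment is ready before writing any scraping code, a short script can check whether the libraries named above can be imported. This is a sketch: `REQUIRED` and `missing_packages` are names chosen for this example, and the only mapping it assumes is that Beautiful Soup is imported as `bs4` but installed from the `beautifulsoup4` package.

```python
import importlib.util

# Import name -> pip package name for the libraries mentioned above.
REQUIRED = {"selenium": "selenium", "bs4": "beautifulsoup4", "pandas": "pandas"}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose modules cannot be found."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        print("Selenium, Beautiful Soup and Pandas are all installed.")
```

Run it inside the activated virtual environment so it checks that environment's packages rather than the system-wide ones.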

To effectively scrape data from LinkedIn posts, you need to understand the HTML structure of the page. Open a LinkedIn post in your web browser and use the browser's developer tools to inspect the HTML elements.

Identify the relevant elements that contain the data you want to extract, such as the post content, author name, date, likes, comments, and shares. Take note of the HTML tags, classes, and IDs associated with these elements. This information will be crucial when writing your scraping code.

With the scraping environment set up and the HTML structure understood, you can now write Python code to extract data from LinkedIn posts. Use Selenium to automate browser interactions, such as logging in to LinkedIn, navigating to the desired post, and scrolling to load all the content.

Once the post is fully loaded, use Beautiful Soup to parse the HTML and locate the specific elements containing the data you want to extract. Use methods like find() and find_all() to select elements based on their tags, classes, or IDs. Extract the text content or attributes of these elements and store them in variables or data structures.
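To make the extraction step concrete, here is a minimal sketch that pulls the author, text and like count out of post markup. The class names `feed-post`, `post-author`, `post-text` and `post-likes` are hypothetical placeholders for whatever you find in the developer tools; LinkedIn's real markup is different and changes often. To keep the sketch free of third-party dependencies it uses Python's built-in `html.parser` rather than Beautiful Soup. With Beautiful Soup, the same idea is a `find_all()` call on the post containers followed by a `find()` call for each field.

```python
from html.parser import HTMLParser

# Hypothetical class names; replace them with the ones you find in dev tools.
POST_CLASS = "feed-post"
FIELD_CLASSES = {"post-author": "author", "post-text": "text", "post-likes": "likes"}
# Elements that never have a closing tag, so they must not change the depth count.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link", "wbr"}


class PostParser(HTMLParser):
    """Collect the text of known fields inside each post container."""

    def __init__(self):
        super().__init__()
        self.posts = []      # one dict per post container found
        self._field = None   # name of the field being captured, if any
        self._depth = 0      # how many open tags deep we are inside that field

    def handle_starttag(self, tag, attrs):
        if self._field is not None:
            if tag not in VOID_TAGS:
                self._depth += 1     # nested markup such as <b> inside the text
            return
        classes = (dict(attrs).get("class") or "").split()
        if POST_CLASS in classes:
            self.posts.append({})    # start a new post record
            return
        for css_class, name in FIELD_CLASSES.items():
            if css_class in classes and self.posts:
                self.posts[-1][name] = ""
                self._field, self._depth = name, 1
                return

    def handle_endtag(self, tag):
        if self._field is not None and tag not in VOID_TAGS:
            self._depth -= 1
            if self._depth == 0:
                self._field = None   # the field's own closing tag was reached

    def handle_data(self, data):
        if self._field is not None:
            self.posts[-1][self._field] += data


def parse_posts(markup):
    """Parse an HTML string and return post dicts with whitespace collapsed."""
    parser = PostParser()
    parser.feed(markup)
    parser.close()
    return [{key: " ".join(value.split()) for key, value in post.items()}
            for post in parser.posts]
```

In a Selenium session you would pass `driver.page_source` to `parse_posts` once the post has finished loading. Values come back as strings, so numeric fields such as likes still need converting during cleaning.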

LinkedIn posts are often paginated, meaning you may need to navigate through multiple pages to scrape all the desired data. Implement pagination handling in your scraping code by identifying the pagination mechanism, such as "Load more" buttons or infinite scrolling.

Use Selenium to click on these buttons or scroll the page to load additional posts. Repeat the data extraction process for each post on each page until you have scraped all the required data.
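Infinite scrolling is usually handled with a loop that scrolls to the bottom, waits, and stops once the page height stops growing. The sketch below keeps that loop independent of Selenium by taking the two page operations as functions. The function name, the 2-second pause and the 20-round cap are illustrative assumptions, not tuned values.

```python
import time
from typing import Callable


def scroll_until_stable(get_height: Callable[[], int],
                        scroll_to_bottom: Callable[[], None],
                        pause: float = 2.0,
                        max_rounds: int = 20,
                        sleep: Callable[[float], None] = time.sleep) -> int:
    """Scroll until the page stops growing; return how many scrolls were made."""
    last_height = get_height()
    for rounds in range(1, max_rounds + 1):
        scroll_to_bottom()
        sleep(pause)                      # give lazily loaded posts time to render
        new_height = get_height()
        if new_height == last_height:
            return rounds                 # no new content appeared: assume the end
        last_height = new_height
    return max_rounds                     # safety cap for feeds that never end
```

With Selenium, `get_height` could be `lambda: driver.execute_script("return document.body.scrollHeight")` and `scroll_to_bottom` could be `lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")`. Passing `sleep` in as a parameter also lets you test the loop without real waiting.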

By following this step-by-step guide and leveraging the power of Python, Selenium, and Beautiful Soup, you can successfully scrape LinkedIn posts and extract valuable data for your projects.

Mastering LinkedIn post scraping opens up a world of possibilities for data analysis, market research, and gaining insights from the vast amount of information shared on the platform. For more on web scraping techniques, check out web scraper extensions that can simplify this process.

When scraping LinkedIn posts, it's crucial to follow best practices and tips to avoid detection, ensure data quality, and maximize the value of the scraped information. By implementing strategies such as randomizing scraping patterns, cleaning and preprocessing data, and exploring potential use cases, you can effectively leverage LinkedIn post data for various business purposes.

Let's dive into the key considerations and techniques for successful LinkedIn scraping while staying within ethical and legal boundaries.

One of the primary concerns when scraping LinkedIn posts is avoiding detection and potential bans. To minimize the risk of being flagged, it's essential to randomize your scraping patterns and introduce delays between requests.

Instead of scraping posts in a predictable or sequential manner, consider randomizing the order and timing of your requests. This helps simulate human-like behavior and reduces the chances of triggering LinkedIn's anti-scraping mechanisms. Additionally, incorporating random delays between requests further enhances the natural appearance of your scraping activity.
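One simple way to randomize both order and timing is to shuffle the list of targets and draw a random pause for each request. This is a minimal sketch: `plan_requests`, `run_plan` and the 2 to 6 second range are assumptions for illustration, and `fetch` stands in for whatever function actually loads a page in your scraper.

```python
import random
import time
from typing import Callable, Iterable, List, Optional, Tuple


def plan_requests(urls: Iterable[str], min_delay: float = 2.0, max_delay: float = 6.0,
                  rng: Optional[random.Random] = None) -> List[Tuple[str, float]]:
    """Shuffle the URLs and attach a random pause (in seconds) to each one."""
    rng = rng or random.Random()
    order = list(urls)
    rng.shuffle(order)                     # avoid a fixed, sequential crawl order
    return [(url, rng.uniform(min_delay, max_delay)) for url in order]


def run_plan(plan: List[Tuple[str, float]], fetch: Callable[[str], object],
             sleep: Callable[[float], None] = time.sleep) -> list:
    """Wait the planned delay before each fetch and collect the results."""
    results = []
    for url, delay in plan:
        sleep(delay)
        results.append(fetch(url))
    return results
```

Seeding the generator (for example `random.Random(42)`) makes a run reproducible while debugging; leave it unseeded in normal use.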

Once you have scraped LinkedIn post data, it's important to clean and preprocess the information to ensure its usability and reliability for analysis. Raw scraped data often contains irrelevant or inconsistent elements that need to be addressed.

Start by removing any HTML tags, special characters, or unwanted whitespace from the scraped content. Next, handle missing or incomplete data points by either removing them or applying appropriate imputation techniques. Standardizing date and time formats, handling outliers, and dealing with duplicate entries are also crucial steps in the data cleaning process.
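The cleaning steps above might look like this for a list of scraped records. It is a standard-library sketch under several assumptions: each record is a dict with `author`, `text`, `date` and `likes` keys (hypothetical field names), dates arrive as `MM/DD/YYYY` strings, rows missing an author or text are dropped, and a missing like count is imputed as 0. Pandas, installed during setup, offers the same operations on whole columns, for example `drop_duplicates()` and `to_datetime()`.

```python
import html
import re
from datetime import datetime

TAG_RE = re.compile(r"<[^>]+>")


def clean_text(raw):
    """Strip HTML tags, decode entities such as &amp;, and collapse whitespace."""
    text = html.unescape(TAG_RE.sub(" ", raw or ""))
    return " ".join(text.split())


def clean_posts(records, date_format="%m/%d/%Y"):
    """Clean, standardize and de-duplicate scraped post records."""
    seen = set()
    cleaned = []
    for record in records:
        author = clean_text(record.get("author"))
        text = clean_text(record.get("text"))
        if not author or not text:
            continue                  # drop incomplete rows instead of imputing them
        try:
            date = datetime.strptime((record.get("date") or "").strip(),
                                     date_format).date().isoformat()
        except ValueError:
            date = None               # keep the row, but flag the date as missing
        digits = re.sub(r"\D", "", str(record.get("likes") or ""))
        likes = int(digits) if digits else 0   # "1,204" -> 1204; missing -> 0 (imputed)
        key = (author, text)
        if key in seen:
            continue                  # skip duplicates of a post already kept
        seen.add(key)
        cleaned.append({"author": author, "text": text, "date": date, "likes": likes})
    return cleaned
```

Abbreviated counts such as "1.2K" would need extra handling, because stripping non-digits would turn them into 12.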

As you scrape large volumes of LinkedIn post data, efficient storage and management become critical. Storing scraped data in a structured and organized manner facilitates easy access, querying, and analysis.

Consider using a database system like MySQL, PostgreSQL, or MongoDB to store your scraped data. These databases provide scalability, indexing capabilities, and support for complex queries. Alternatively, you can use file formats like CSV or JSON for smaller datasets or when database setup is not feasible.
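As a lightweight stand-in for MySQL or PostgreSQL, the sketch below uses SQLite, which ships with Python, to store cleaned posts. The table layout and the `UNIQUE (author, text)` rule for skipping re-scraped posts are assumptions for this example. The same ideas carry over to server databases, though details such as placeholder style and the syntax for ignoring duplicates differ.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS posts (
    id     INTEGER PRIMARY KEY,
    author TEXT NOT NULL,
    text   TEXT NOT NULL,
    date   TEXT,                 -- ISO 8601 date string, e.g. 2024-03-15
    likes  INTEGER DEFAULT 0,
    UNIQUE (author, text)        -- a re-scraped post is ignored, not duplicated
);
CREATE INDEX IF NOT EXISTS idx_posts_date ON posts (date);
"""


def save_posts(conn, posts):
    """Create the table if needed and insert post dicts, skipping duplicates."""
    conn.executescript(SCHEMA)
    with conn:                       # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR IGNORE INTO posts (author, text, date, likes) "
            "VALUES (:author, :text, :date, :likes)",
            posts,
        )


def top_posts(conn, limit=5):
    """Return (author, likes) pairs for the most-liked posts."""
    return conn.execute(
        "SELECT author, likes FROM posts ORDER BY likes DESC LIMIT ?", (limit,)
    ).fetchall()
```

Use `sqlite3.connect("posts.db")` for a file on disk, or `":memory:"` for a throwaway database while experimenting.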

Scraped LinkedIn post data opens up a wide range of possibilities for businesses and researchers. One prominent use case is sentiment analysis, where you can analyze the sentiment expressed in posts to gauge public opinion, track brand perception, or identify trends.
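As a toy illustration of the idea, the sketch below scores a post by counting words from small positive and negative word lists, then summarizes the share of each label across a set of posts. The word lists and the 0.05 threshold are made up for this example; real sentiment analysis would rely on an established lexicon or a trained model rather than a handful of keywords.

```python
import re
from collections import Counter

# Tiny illustrative word lists (assumptions), not a real sentiment lexicon.
POSITIVE = {"great", "excited", "love", "growth", "success", "proud"}
NEGATIVE = {"layoffs", "bad", "disappointed", "decline", "problem", "worst"}


def sentiment_score(text):
    """(positive hits - negative hits) / word count, so the result is in [-1, 1]."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    positive = sum(word in POSITIVE for word in words)
    negative = sum(word in NEGATIVE for word in words)
    return (positive - negative) / len(words)


def sentiment_label(text, threshold=0.05):
    """Map the score to a coarse label using an arbitrary threshold."""
    score = sentiment_score(text)
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


def label_shares(texts):
    """Fraction of posts per label, e.g. to track brand perception over time."""
    counts = Counter(sentiment_label(text) for text in texts)
    total = sum(counts.values()) or 1
    return {label: counts[label] / total for label in ("positive", "neutral", "negative")}
```

Computing `label_shares` separately for each week or month gives a rough perception trend for a brand or topic.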

Another valuable application is trend detection. By analyzing the content and engagement metrics of LinkedIn posts over time, you can uncover emerging topics, popular hashtags, or influential voices within specific industries. These insights can inform content strategy, marketing campaigns, and competitive intelligence efforts.

By applying best practices and leveraging scraped LinkedIn post data effectively, you can gain valuable insights, make data-driven decisions, and stay ahead of the curve in your business or research endeavors.

Remember, while scraping LinkedIn posts can be a powerful tool, it's essential to respect user privacy, adhere to ethical guidelines, and comply with LinkedIn's terms of service. Always prioritize responsible and sustainable scraping practices to ensure the long-term viability of your data extraction efforts.

Congratulations on making it this far! Your dedication to mastering LinkedIn post scraping is truly commendable. Just imagine the endless possibilities and insights you'll unlock with your newly acquired skills. Don't let all this knowledge go to waste – put it into practice and become the LinkedIn scraping guru you were meant to be!

Maximize your productivity with the enrich LinkedIn profile tool from Bardeen. Save time by automating profile updates directly in Google Sheets and streamline your data processing.

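Scraping LinkedIn posts is a valuable skill for businesses and professionals to gather insights and make data-driven decisions. In this comprehensive guide, you discovered LinkedIn's data structure and scraping policies, the tools and techniques that make scraping efficient, a step-by-step process for extracting post data with Python, and best practices for cleaning, storing, and using the results.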

Don't let your competitors outpace you in the data-driven world. Master the art of scraping LinkedIn posts today, or risk falling behind in the race for valuable insights! For broader data strategies, learn how to scrape Twitter to expand your data collection.

SOC 2 Type II, GDPR and CASA Tier 2 and 3 certified — so you can automate with confidence at any scale.

Bardeen is an automation and workflow platform designed to help GTM teams eliminate manual tasks and streamline processes. It connects and integrates with your favorite tools, enabling you to automate repetitive workflows, manage data across systems, and enhance collaboration.

Bardeen acts as a bridge to enhance and automate workflows. It can reduce your reliance on tools focused on data entry and CRM updating, lead generation and outreach, reporting and analytics, and communication and follow-ups.

Bardeen is ideal for GTM teams across various roles including Sales (SDRs, AEs), Customer Success (CSMs), Revenue Operations, Sales Engineering, and Sales Leadership.

Bardeen integrates broadly with CRMs, communication platforms, lead generation tools, project and task management tools, and customer success tools. These integrations connect workflows and ensure data flows smoothly across systems.

Bardeen supports a wide variety of use cases across different teams, such as:

Sales: Automating lead discovery, enrichment and outreach sequences. Tracking account activity and nurturing target accounts.

Customer Success: Preparing for customer meetings, analyzing engagement metrics, and managing renewals.

Revenue Operations: Monitoring lead status, ensuring data accuracy, and generating detailed activity summaries.

Sales Leadership: Creating competitive analysis reports, monitoring pipeline health, and generating daily/weekly team performance summaries.