Scraping dynamic web pages can be a daunting task, as they heavily rely on JavaScript and AJAX to load content dynamically without refreshing the page. In this comprehensive guide, we'll walk you through the process of scraping dynamic web pages using Python in 2024. We'll cover the essential tools, techniques, and best practices to help you navigate the complexities of dynamic web scraping and achieve your data extraction goals efficiently and ethically.

Understanding Dynamic Web Pages and Their Complexities

Dynamic web pages are web pages that display different content for different users while retaining the same layout and design. Unlike static web pages that remain the same for every user, dynamic pages are generated in real-time, often pulling content from databases or external sources.

JavaScript and AJAX play a crucial role in creating dynamic content that changes without requiring a full page reload. JavaScript allows for client-side interactivity and dynamic updates to the page, while AJAX (Asynchronous JavaScript and XML) enables web pages to send and receive data from a server in the background, updating specific parts of the page without disrupting the user experience.

Key characteristics of dynamic web pages include:

- Personalized content based on user preferences or behavior

- Real-time updates, such as stock prices or weather information

- Interactive elements like forms, shopping carts, and user submissions

- Integration with databases to store and retrieve data

Creating dynamic web pages requires a combination of client-side and server-side technologies. Client-side scripting languages like JavaScript handle the interactivity and dynamic updates within the user's browser, while server-side languages like PHP, Python, or Ruby generate the dynamic content and interact with databases on the server.

Setting Up Your Python Environment for Web Scraping

To start web scraping with Python, you need to set up your environment with the essential libraries and tools. Here's a step-by-step guide:

- Install Python: Ensure you have Python installed on your system. We recommend using Python 3.x for web scraping projects.

- Set up a virtual environment (optional but recommended): Create a virtual environment to keep your project dependencies isolated. Use the following commands:

python -m venv myenvsource myenv/bin/activate(Linux/Mac) or myenv\Scripts\activate(Windows)

- Install required libraries:

- Requests:

pip install requests - BeautifulSoup:

pip install beautifulsoup4 - Selenium:

pip install selenium

- Install a web driver for Selenium:

- Download the appropriate web driver for your browser (e.g., ChromeDriver for Google Chrome, GeckoDriver for Mozilla Firefox).

- Add the web driver executable to your system's PATH or specify its location in your Python script.

With these steps completed, you're ready to start web scraping using Python. Here's a quick example that demonstrates the usage of Requests and BeautifulSoup:

import requests

from bs4 import BeautifulSoup

url='https://example.com'

response=requests.get(url)

soup=BeautifulSoup(response.content,'html.parser')

# Find and extract specific elements

title=soup.find('h1').text

paragraphs=[p.text for p in soup.find_all('p')]

print(title)

print(paragraphs)

This code snippet sends a GET request to a URL, parses the HTML content using BeautifulSoup, and extracts the title and paragraphs from the page.

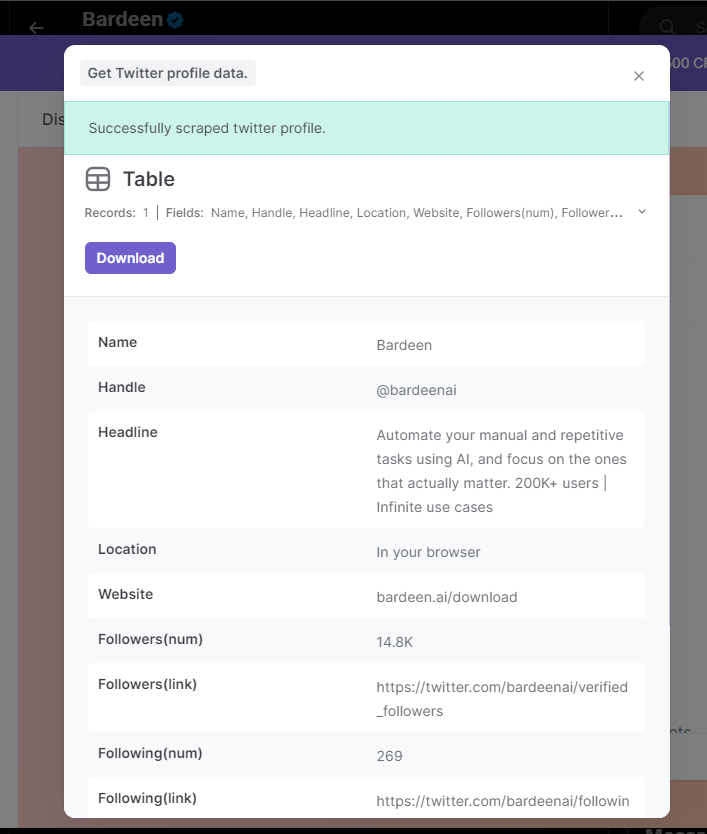

Want to make web scraping even easier? Use Bardeen's playbook to automate data extraction. No coding needed.

Remember to respect website terms of service and robots.txt files when web scraping, and be mindful of the server load to avoid causing any disruptions.

Utilizing Selenium for Automated Browser Interactions

Selenium is a powerful tool for automating interactions with dynamic web pages. It allows you to simulate user actions like clicking buttons, filling out forms, and scrolling through content. Here's how to use Selenium to automate Google searches:

- Install Selenium and the appropriate web driver for your browser (e.g., ChromeDriver for Google Chrome).

- Import the necessary Selenium modules in your Python script:

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

- Initialize a Selenium WebDriver instance:

driver = webdriver.Chrome()

- Navigate to the desired web page:

driver.get("https://example.com")

- Locate elements on the page using various methods like

find_element() and find_elements(). You can use locators such as CSS selectors, XPath, or element IDs to identify specific elements. - Interact with the located elements using methods like

click(), send_keys(), or submit(). - Wait for specific elements to appear or conditions to be met using explicit waits:

wait = WebDriverWait(driver, 10)

element = wait.until(EC.presence_of_element_located((By.ID, "myElement")))

- Retrieve data from the page by accessing element attributes or text content.

- Close the browser when done:

driver.quit()

Here's a simple example that demonstrates automating a search on Google:

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

driver = webdriver.Chrome()

driver.get("https://www.google.com")

search_box = driver.find_element(By.NAME, "q")

search_box.send_keys("Selenium automation")

search_box.send_keys(Keys.RETURN)

results = driver.find_elements(By.CSS_SELECTOR, "div.g")

for result in results:

print(result.text)

driver.quit()

This script launches Chrome, navigates to Google, enters a search query, submits the search, and then prints the text of each search result.

By leveraging Selenium's automation capabilities, you can interact with dynamic web pages, fill out forms, click buttons, and extract data from the rendered page. This makes it a powerful tool for web scraping and testing applications that heavily rely on JavaScript.

Advanced Techniques: Handling AJAX Calls and Infinite Scrolling

When scraping dynamic web pages, two common challenges are handling AJAX calls and infinite scrolling. Here's how to tackle them using Python:

Handling AJAX Calls

- Identify the AJAX URL by inspecting the network tab in your browser's developer tools.

- Use the

requests library to send a GET or POST request to the AJAX URL, passing any required parameters. - Parse the JSON response to extract the desired data.

Example using requests:

import requests

url = 'https://example.com/ajax'

params = {'key': 'value'}

response = requests.get(url, params=params)

data = response.json()

Handling Infinite Scrolling

- Use Selenium WebDriver to automate scrolling and load additional content.

- Scroll to the bottom of the page using JavaScript.

- Wait for new content to load and repeat the process until all desired data is loaded.

- Extract the data from the fully loaded page.

Example using Selenium:

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

driver = webdriver.Chrome()

driver.get('https://example.com')

while True:

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

try:

WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CSS_SELECTOR, "div.new-content")))

except:

break

data = driver.page_source

By using these techniques, you can effectively scrape data from web pages that heavily rely on AJAX calls and implement infinite scrolling. Remember to be respectful of website owners and follow ethical scraping practices.

Want to automate data extraction from websites easily? Try Bardeen's playbook for a no-code, one-click solution.

Overcoming Obstacles: Captchas and IP Bans

When scraping dynamic websites, you may encounter challenges like CAPTCHAs and IP bans. Here's how to handle them:

Dealing with CAPTCHAs

- Use CAPTCHA solving services like 2Captcha or Anti-Captcha to automatically solve CAPTCHAs.

- Integrate these services into your scraping script using their APIs.

- If a CAPTCHA appears, send it to the solving service and use the returned solution to proceed.

Example using 2Captcha:

import requests

api_key = 'YOUR_API_KEY'

captcha_url = 'https://example.com/captcha.jpg'

response = requests.get(f'http://2captcha.com/in.php?key={api_key}\u0026method=post\u0026body={captcha_url}')

captcha_id = response.text.split('|')[1]

while True:

response = requests.get(f'http://2captcha.com/res.php?key={api_key}\u0026action=get\u0026id={captcha_id}')

if response.text != 'CAPCHA_NOT_READY':

captcha_solution = response.text.split('|')[1]

break

Handling IP Bans

- Rotate IP addresses using a pool of proxies to avoid sending too many requests from a single IP.

- Introduce random delays between requests to mimic human behavior.

- Use a headless browser like Puppeteer or Selenium to better simulate human interaction with the website.

- Respect the website's robots.txt file and terms of service to minimize the risk of IP bans.

By employing these techniques, you can effectively overcome CAPTCHAs and IP bans while scraping dynamic websites. Remember to use these methods responsibly and respect website owners' policies to ensure ethical scraping practices.

Ethical Considerations and Best Practices in Web Scraping

When scraping websites, it's crucial to adhere to legal and ethical guidelines to ensure responsible data collection. Here are some key considerations:

- Respect the website's robots.txt file, which specifies rules for web crawlers and scrapers.

- Avoid overloading the target website with requests, as this can disrupt its normal functioning and cause harm.

- Be transparent about your identity and provide a way for website owners to contact you with concerns or questions.

- Obtain explicit consent before scraping personal or sensitive information.

- Use the scraped data responsibly and in compliance with applicable laws and regulations, such as data protection and privacy laws.

To minimize the impact on the target website and avoid potential legal issues, follow these best practices:

- Limit the frequency of your requests to avoid overwhelming the server.

- Implement delays between requests to mimic human browsing behavior.

- Use caching mechanisms to store and reuse previously scraped data when possible.

- Distribute your requests across multiple IP addresses or use proxies to reduce the load on a single server.

- Regularly review and update your scraping scripts to ensure they comply with any changes in the website's structure or terms of service.

Want to make web scraping easier? Use Bardeen's playbook to automate data extraction. No coding needed.

By adhering to these ethical considerations and best practices, you can ensure that your web scraping activities are conducted responsibly and with respect for website owners and users.

Scraping dynamic web pages, which display content based on user interaction or asynchronous JavaScript loading, presents unique challenges. However, with Bardeen, you can automate this process efficiently, leveraging its integration with various tools, including the Scraper template. This automation not only simplifies data extraction from dynamic pages but also streamlines workflows for data analysis, market research, and competitive intelligence.

- Get web page content of websites: Automate the extraction of web page content from a list of URLs in your Google Sheets, updating each row with the website's content for easy analysis and review.

- Get keywords and a summary from any website save it to Google Sheets: Extract key data points from websites, summarize the content, and identify important keywords, storing the results directly in Google Sheets for further action.

- Get members from the currently opened LinkedIn group members page: Utilize Bardeen’s Scraper template to extract member information from LinkedIn groups, ideal for building targeted outreach lists or conducting market analysis.

By automating these tasks with Bardeen, you can save significant time and focus on analyzing the data rather than collecting it. For more automation solutions, visit Bardeen.ai/download.

.svg)

.svg)

.svg)